Load Balancing Application Servers

In the world of managing high-traffic websites and applications, ensuring seamless performance and availability is paramount. As traffic grows, spreading it across a pool of application servers with an effective load balancing strategy becomes essential. As an experienced blogger in the tech industry, I’ve seen firsthand how much a well-executed load balancing setup can do to optimize server resources and improve the user experience.

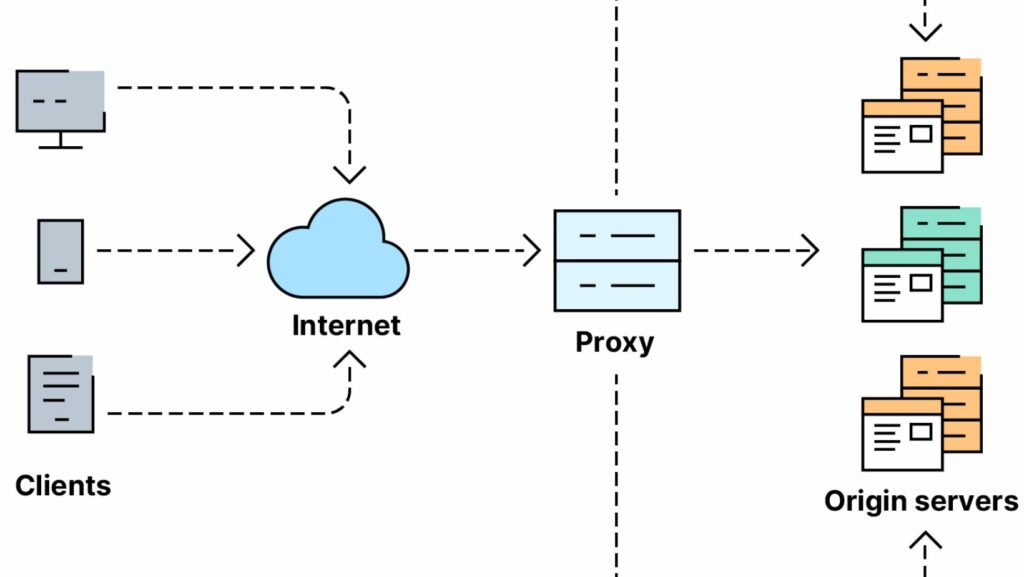

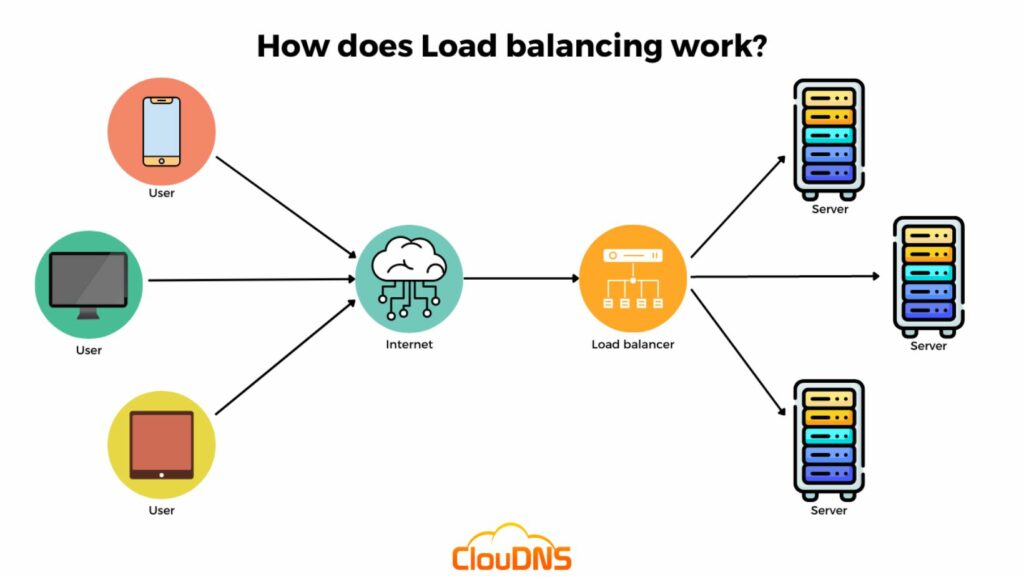

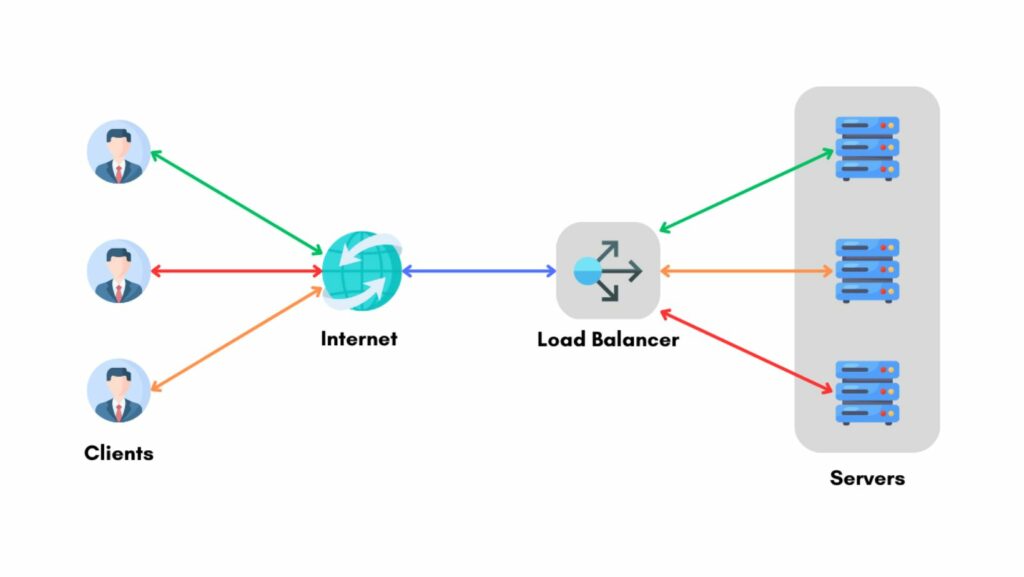

Load balancing plays a crucial role in distributing incoming network traffic across multiple servers, preventing any single server from becoming overwhelmed. By evenly distributing workloads, it helps maintain stability, minimize downtime, and improve overall system efficiency. Join me as we delve deeper into the intricacies of load balancing application servers and explore the best practices for achieving optimal performance and reliability in today’s dynamic digital landscape.

Understanding Load Balancing for Application Servers

Load balancing is the process of distributing incoming network traffic across multiple servers. It ensures that no single server is overwhelmed with requests, which optimizes resource utilization and helps prevent downtime. In essence, a load balancer acts as a traffic manager, improving the performance and reliability of application servers by efficiently handling user requests and traffic spikes.

Load balancing is essential for application servers to maintain high availability and scalability. By spreading traffic across servers, it improves performance, reduces response times, and enhances the overall user experience. Because a load balancer can stop sending traffic to a server that fails or is taken down for maintenance, and new servers can be added behind it as demand grows, applications can handle increased loads without compromising speed or functionality. That makes it a fundamental component for keeping operations running smoothly in dynamic digital environments.

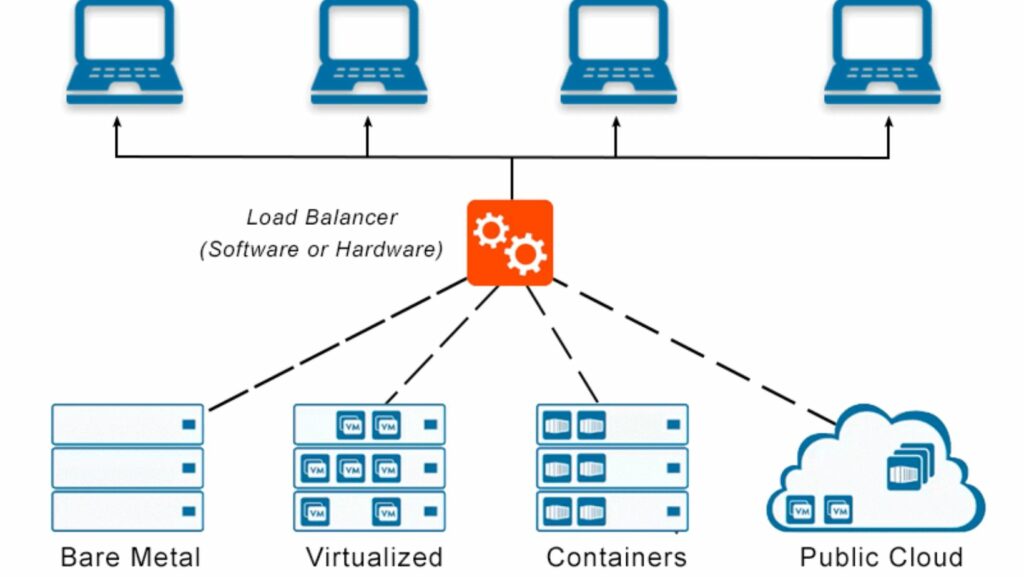

When considering load balancing strategies for application servers, it’s crucial to evaluate the differences between hardware and software load balancers. Hardware load balancers are physical devices specifically designed for distributing traffic across servers efficiently. They offer high performance and reliability but can be expensive. On the other hand, software load balancers are applications that run on standard servers, providing flexibility and cost-effectiveness. Understanding the distinct features of each type helps in choosing the most suitable option based on the specific requirements of the system.

In load balancing application servers, the Round-Robin technique is a fundamental method for distributing incoming traffic. It rotates through a list of servers and assigns each new connection to the next server in line. Every server therefore receives an equal share of requests by count, so no single server is singled out for extra work. Because round robin ignores how long each request takes and how powerful each server is, it works best when the servers have similar capacity and the requests have similar cost. Within those limits, it is simple, predictable, and makes fair use of server resources.
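To make the rotation concrete, here is a minimal Python sketch of a round-robin picker. It assumes backends are identified by hypothetical `host:port` strings; the class name `RoundRobinBalancer` and its `pick` method are illustrative, not taken from any particular load balancer.

```python
import threading
from typing import Sequence


class RoundRobinBalancer:
    """Hands out backends in a fixed rotating order, one per new request."""

    def __init__(self, backends: Sequence[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        self._next = 0
        # Several request handlers may call pick() at once, so guard the index.
        self._lock = threading.Lock()

    def pick(self) -> str:
        with self._lock:
            backend = self._backends[self._next]
            # Advance to the next server, wrapping back to the first one.
            self._next = (self._next + 1) % len(self._backends)
        return backend


# Hypothetical backend addresses, for illustration only.
balancer = RoundRobinBalancer(["app-1:8080", "app-2:8080", "app-3:8080"])
picks = [balancer.pick() for _ in range(5)]
# picks == ['app-1:8080', 'app-2:8080', 'app-3:8080', 'app-1:8080', 'app-2:8080']
```

Note that `pick` never looks at how busy a server is; that is exactly the trade-off that makes round robin cheap and predictable.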

Another essential strategy for load balancing application servers is the Least Connections method. This approach directs each incoming request to the server with the fewest active connections at that moment. Because it reacts to the current load on each server, it keeps loads balanced even when some requests (long-lived or slow ones, for example) tie up a server far longer than others, a situation where round robin can keep piling work onto an already busy server. The result is typically better response times, scalability, and resource utilization, which makes it a valuable technique for ensuring a good user experience and system reliability.
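Here is a rough Python sketch of the idea, assuming the balancer is told when each connection opens and closes. The class `LeastConnectionsBalancer`, its `acquire`/`release` methods, and the backend names are hypothetical; real load balancers track connection counts internally.

```python
import threading
from typing import Sequence


class LeastConnectionsBalancer:
    """Sends each new connection to the backend with the fewest open connections."""

    def __init__(self, backends: Sequence[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        # backend -> number of currently open connections (insertion order kept)
        self._active = {backend: 0 for backend in backends}
        self._lock = threading.Lock()

    def acquire(self) -> str:
        with self._lock:
            # min() returns the first backend with the smallest count,
            # so ties go to the earliest backend in the list.
            backend = min(self._active, key=self._active.__getitem__)
            self._active[backend] += 1
            return backend

    def release(self, backend: str) -> None:
        # Call this when the connection to `backend` closes.
        with self._lock:
            self._active[backend] -= 1


balancer = LeastConnectionsBalancer(["app-1", "app-2"])
a = balancer.acquire()  # "app-1": both idle, the first listed wins the tie
b = balancer.acquire()  # "app-2": app-1 now has one connection
c = balancer.acquire()  # "app-1": tied at one each again
balancer.release(b)     # app-2's request finishes: app-1=2, app-2=0
d = balancer.acquire()  # "app-2": it now has the fewest connections
```

The `release` call is what lets the method adapt: a server stuck with a long request keeps a high count and stops receiving new work until it catches up.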

Implementing Load Balancing in Different Environments

Load Balancing in Cloud Environments

In cloud environments, load balancing distributes incoming network traffic across virtual servers. Cloud-based load balancers offer scalability, flexibility, and high availability, adjusting to traffic demand as capacity is added or removed behind them. Using a cloud load balancer helps keep performance high and response times low even as traffic fluctuates, improving the overall user experience.

Load Balancing On-Premises Servers

When deploying load balancing for on-premises servers, organizations gain greater control over their network infrastructure. Load balancers installed on their own physical hardware optimize resource allocation, improve server performance, and support high availability by spreading traffic across servers and steering it away from any server that fails.

On-premises load balancing solutions can be customized to meet specific performance requirements and can absorb server traffic spikes, improving system stability and user satisfaction.

SSL Termination and SSL Offloading

When it comes to advanced load balancing, SSL termination and SSL offloading play a crucial role in optimizing server performance. SSL termination means decrypting SSL/TLS-encrypted traffic at the load balancer instead of at the backend servers. Offloading this decryption work from the servers to the load balancer reduces the computational burden on the backends, so they can spend more of their capacity on handling application requests.
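To show where the decryption happens, here is a rough Python sketch of a TLS-terminating TCP proxy built on the standard `asyncio` and `ssl` modules. It assumes a certificate and private key in PEM files and a single plaintext backend. The function names (`make_tls_context`, `pipe`, `handle_client`, `serve`), file paths, and addresses are all hypothetical, and a real load balancer would add backend selection, timeouts, and health checks on top.

```python
import asyncio
import ssl


def make_tls_context(certfile: str, keyfile: str) -> ssl.SSLContext:
    # Server-side TLS context: the load balancer holds the certificate and key,
    # so the backend servers never perform TLS handshakes themselves.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.load_cert_chain(certfile, keyfile)
    return ctx


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    # Copy bytes in one direction until the reader reaches EOF.
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
    except OSError:
        writer.close()  # peer reset: tear down the other side so both pipes finish
        return
    if writer.can_write_eof():
        writer.write_eof()  # half-close so the opposite direction can still finish


async def handle_client(client_reader: asyncio.StreamReader,
                        client_writer: asyncio.StreamWriter,
                        backend_host: str, backend_port: int) -> None:
    # asyncio's SSL transport has already completed the handshake and decrypts
    # incoming bytes, so client_reader yields plaintext at this point.
    try:
        # Plain TCP to the backend: no TLS work on the application server.
        backend_reader, backend_writer = await asyncio.open_connection(
            backend_host, backend_port)
    except OSError:
        client_writer.close()
        return
    try:
        await asyncio.gather(
            pipe(client_reader, backend_writer),  # decrypted request -> backend
            pipe(backend_reader, client_writer),  # response -> encrypted to client
        )
    finally:
        backend_writer.close()
        client_writer.close()


async def serve(certfile: str, keyfile: str, listen_port: int,
                backend_host: str, backend_port: int) -> None:
    tls = make_tls_context(certfile, keyfile)

    async def on_client(reader: asyncio.StreamReader,
                        writer: asyncio.StreamWriter) -> None:
        await handle_client(reader, writer, backend_host, backend_port)

    # ssl=tls makes this listener terminate TLS for every accepted connection.
    server = await asyncio.start_server(on_client, "0.0.0.0", listen_port, ssl=tls)
    async with server:
        await server.serve_forever()
```

With hypothetical files and addresses, it could be started with something like `asyncio.run(serve("lb-cert.pem", "lb-key.pem", 443, "10.0.0.11", 8080))`. Everything between the proxy and `10.0.0.11:8080` travels unencrypted, which is the usual trade-off of SSL termination: it is only appropriate when the internal network between load balancer and backends is trusted.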